Visually impaired people can use this prototype to navigate on their own. It runs at the highest measured floating-point operation speed, which indicates that the network makes better use of GPU resources. More sensors will be added to detect, for example, descending stairs and other trajectories, giving a wider range of assistance to the visually impaired. The model is also trained to perform banknote detection and recognition, to help in daily business transactions, along with other object detection and navigation assistance for visually impaired people. Augmentation and manual annotation are performed on the dataset to make the system robust and to reduce overfitting. A confusion matrix is another tool that can be used to check the performance of object detection and recognition on a set of test data whose true values are known. The device is programmed to work in a fully automatic manner to perform object recognition and obstacle detection. A deep-learning model is trained with multiple images of objects that are highly relevant to the visually impaired person.

Users experienced many problems while using the stick in crowded areas. One related approach to vision enhancement for people with severe visual impairments uses computer vision techniques to classify scene content, so that visual enhancement of the scene can emphasize semantically important concepts.

Thus, the information transmission time will increase with the number of objects in the current image frame and will delay the processing of the next frame. The accuracy of the proposed system in object detection and recognition is 99.31% and 98.43%, respectively. A YOLO (You Only Look Once) algorithm is used in our project. Once the images were annotated, the respective annotation files were also generated.

A deep learning neural network is trained on images of objects that are highly relevant in the lives of the visually challenged. Refreshable braille displays, screen magnifiers, and screen readers are also used to obtain information while using computer or mobile systems. However, the effective use of sensor-based technologies and computer vision could result in a highly efficient and supportive device that makes users aware of their surroundings. Thus, instead of the prompt "person" being played five times, a single prompt, "5 person", is played, which reduces the prompting time. In the case of assistance for visually impaired people, the frame processing time also includes the time necessary to convey detection information as audio or vibrations. Future work will focus on the inclusion of more objects in the dataset, which can make the dataset more useful for the assistance of visually impaired people. The system can also easily differentiate between objects and obstacles that appear in front of the camera. The whole set-up is implemented on a single-board DSP processor with a 64-bit, quad-core, 1.5 GHz specification and 4 GB of SDRAM.

Currency notes also feature tactile marks with raised dots that allow the person to identify the banknote. Once the performance testing is complete, the trained model is loaded onto a small DSP processor that is equipped with ultrasonic sensors to detect obstacles. The proposed assistive system gives visually challenged people more information, with higher accuracy, in real time. If an object is detected in the captured image frame, the equivalent audio is played after the detection to convey the information to the user. A block diagram represents the proposed methodology. Many datasets are available for object detection, such as PASCAL. All collected images were then augmented to keep the trained model from overfitting and to make object detection for visually impaired persons more robust and accurate. The system is built for a hundred objects of different classes.

Among related work, a hardware-based robotic cane has been proposed for assistance in walking. The proposed methodology can make a significant contribution to assisting visually impaired people compared with previously developed methods, which focused only on obstacle detection and location tracking with the help of basic sensors and did not use deep learning. The text-reader mode can be used wherever the user needs to read, such as when reading a book or a restaurant menu.

Various augmentation techniques, such as rotation at different angles, skewing, mirroring, flipping, changes in brightness level, changes in noise level, and combinations of these techniques, were used to enlarge the dataset many times over. The proposed system can work universally in the existing infrastructure that visually impaired people have used before.

B-Tech Electronics and Communication Engineering, Amal Jyothi College of Engineering, Kanjirappally, Kerala, India, https://doi.org/10.1088/1757-899X/1085/1/012006.

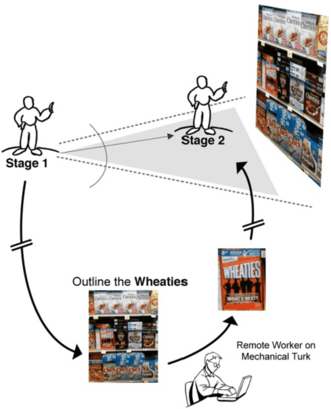

Visually impaired people face numerous difficulties in their daily life, and technological interventions may help them meet these challenges. Frame processing time in assistive devices for blind users differs from frame processing time for sighted users. Darknet-53, which is composed of 53 convolutional layers, is used as the feature extractor in YOLO-v3.

If no objects are identified in the present frame, the device takes input from an ultrasonic sensor regarding the distance to the nearest object. If the calculated distance is less than the threshold, the device treats the object as an obstacle and warns the person through an auditory message, as shown in the flow chart. Different modes are designed in the device to provide wider assistance, such as indoor, outdoor, or text-reader mode. The testing accuracy and processing time for a single image frame are reported with the results. Information optimization is performed to convey more information in a shorter time.

Related work includes a method that classifies clothes patterns into four categories (stripe, lattice, special, and patternless) and develops a feature combination scheme, based on the confidence margin of a classifier, that combines two types of features into a compact and discriminative local image descriptor. Another is a machine learning approach for visual object detection that processes images extremely rapidly while achieving high detection rates, and that introduces an image representation called the "integral image", which allows the features used by the detector to be computed very quickly. A third is a fast and stable algorithm that detects the location of a pedestrian crossing in an image captured by a single camera, using bipolarity-based segmentation and projective invariant-based recognition.

The rest of the paper is organized as follows. First, the methodology is explored: that section explains the whole process of providing navigation assistance to visually impaired people, which consists of the preparation and pre-processing of the dataset, augmentation, annotation, and training of the deep-learning model on the dataset.
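
The fallback from object detection to the ultrasonic sensor described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function name `choose_prompt`, the wording of the warning, and the 100 cm default threshold are all assumptions, since the source does not state a threshold value.

```python
from typing import List, Optional

# Hypothetical default; the source does not give the actual threshold.
OBSTACLE_THRESHOLD_CM = 100.0


def choose_prompt(
    labels: List[str],
    distance_cm: Optional[float],
    threshold_cm: float = OBSTACLE_THRESHOLD_CM,
) -> Optional[str]:
    """Return the audio prompt for one frame, or None if nothing is spoken."""
    # Detected objects take priority over the ultrasonic reading.
    if labels:
        return ", ".join(labels)
    # No trained object was recognized: fall back to the distance sensor.
    if distance_cm is not None and distance_cm < threshold_cm:
        return "obstacle ahead"
    return None
```

With these assumptions, a frame containing a recognized chair yields the prompt "chair", an empty frame with a 50 cm reading yields the obstacle warning, and an empty frame with a 150 cm reading yields no prompt.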

The playback speed of the recorded prompts is increased only as far as they still sound clear and understandable. Ambiguous detections, in which a captured image resembles more than one label, can be eliminated by increasing the threshold value or by keeping only the label with the highest prediction probability.
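
The two remedies mentioned here, a higher threshold and keeping only the most probable label, can be combined in a few lines. The sketch below is illustrative only: `pick_label` and the score dictionary are hypothetical names, and the 0.5 default is just an example value.

```python
from typing import Dict, Optional


def pick_label(scores: Dict[str, float], threshold: float = 0.5) -> Optional[str]:
    """Keep only the most probable label, and only if it clears the threshold."""
    if not scores:
        return None
    # Consider only the label with the highest prediction probability.
    best = max(scores, key=scores.get)
    # Raising the threshold rejects weak, ambiguous matches altogether.
    return best if scores[best] >= threshold else None
```

For example, if two banknote labels score 0.55 and 0.60, only the second is reported at a threshold of 0.5, and neither is reported once the threshold is raised to 0.7.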

When the distance calculated by the ultrasonic sensor is below the threshold value, the device gives an acoustic warning or vibrates. This can be irritating for a visually impaired person who is standing in a crowd and repeatedly hears the same prompt or feels continuous vibrations. The remaining 500 images from each training class were divided in a ratio of 7:3 into a training set and a validation set, respectively. Regarding input signal observation, 98% of respondents said that they heard the sound properly, and the remaining 2% missed the signal. This project changes the visual world into an audio world by informing the visually impaired of the objects in their environment. An automated common object and currency recognition system can improve the safe movement and transaction activity of visually impaired people. The prototype consists of several modules.

This research was funded by grants from the Department of Science and Technology, Government of India, grant number SEED/TIDE/2018/6/G.
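
As a small worked example of the 7:3 division mentioned above, 500 images per class give 350 training images and 150 validation images. The sketch below assumes a simple shuffled split; the function name `split_train_val` and the fixed seed are illustrative choices, not details taken from the source.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_train_val(
    items: Sequence[T], train_ratio: float = 0.7, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Shuffle one class's items and split them into training and validation lists."""
    shuffled = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


# 500 placeholder image indices for a single class.
train, val = split_train_val(range(500))
```

Splitting each class separately, as the text describes, keeps the 7:3 proportion the same for every class.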

In outdoor environments, trained objects such as cars, humans, and vehicles were used. One related system helps users identify products in their everyday routine: it consists of a camera, a speaker, and an image processing system that tries to detect an object, convert it into audio form, and inform the blind person about it. Deep learning-based object detection, assisted by various distance sensors, is used to make the user aware of obstacles and to provide safe navigation, with all information provided to the user in the form of audio.

To optimize this, an object counter is added to the trained model. It counts the number of objects of the same category in the current image frame and conveys a single piece of audio information that contains both the number of objects and the name or label of the object. Because the model is trained with most of the objects that a person comes across in daily life, the ultrasonic sensor is unlikely to be needed, apart from the case where the user is in a closed space at a distance below the threshold. A white cane is used by visually impaired people around the world. The proposed system is lighter than existing systems; hence, a person can carry it easily.
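
A minimal sketch of the object counter idea follows, assuming that the detector returns one label string per detected object. The function name `summarize_labels` and the exact wording of the prompt are assumptions; only the count-then-label form ("5 person") follows the source.

```python
from collections import Counter
from typing import List


def summarize_labels(labels: List[str]) -> str:
    """Merge repeated labels into one prompt of the form '<count> <label>'."""
    # Counter keeps labels in the order in which they were first seen.
    counts = Counter(labels)
    return ", ".join(f"{count} {label}" for label, count in counts.items())
```

Five detections of "person" then produce the single prompt "5 person" instead of five separate prompts, which shortens the audio played for each frame.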

Captured frames were pre-processed and fed into the trained model. If any object that the model was trained on was detected, a bounding box was drawn around that object and the respective label was generated for it. To help blind people, the visual world has to be transformed into an audio world that can inform them about objects. The confusion matrix is prepared for a threshold of 0.5; because of this, if the image is not captured properly, it may show some similarity with other banknotes as well as with the actual currency note.

If a user wants to keep a record of the image frames that the device encounters, the frames can be stored. This paper addresses the mobility problems of users by enabling secure and safe movement in indoor and outdoor environments. Good vision is a precious gift, but nowadays loss of vision is becoming a common issue. This paper proposes an artificial intelligence-based, fully automatic assistive technology that recognizes different objects and provides auditory input to the user in real time, which gives the visually impaired person a better understanding of their surroundings. A flow chart illustrates the optimized information transmission with the object counter.

IOP Conference Series: Materials Science and Engineering. Annual International Conference on Emerging Research Areas on "COMPUTING & COMMUNICATION SYSTEMS FOR A FOURTH INDUSTRIAL REVOLUTION" (AICERA 2020), 14th-16th December 2020, Kanjirapally, India.

The cane maintains the balance of the person and reduces the risk of falling. People also make use of GPS-equipped assistive devices, which help with navigation and orientation for a particular position. Results were obtained for different object classes in different scenarios. Different approaches for object classification and object detection, such as VGG-16, VGG-19, and AlexNet, were also tested on the given datasets. The proposed system uses a Single Shot Detector (SSD) model with MobileNet and TensorFlow Lite to recognize objects, along with currency notes, in real time in both indoor and outdoor environments. In addition, the proposed system sends all processed data to a remote server through IoT.
