Do let me know if you have any questions, comments or ideas for improvement. In this Kafka Streams Transformations tutorial, the `branch` example had three predicates: two filters for key name and one default predicate for everything else. As an aside, we discovered during testing that with enough concurrency, the writes to the outbox table would cause deadlocks in MySQL. You can create both stateless and stateful transformers. Before we go into the source code examples, let's cover a little background and also a screencast of running through the examples. How can we guarantee this when the database and our job queue can fail independently of each other? Should you have any feedback or doubts regarding this article, you can share them via comments. In `groupBy` we deviate from stateless to stateful transformation in order to test expected results. Feel free to play around with the code, add more payloads, and modify the aggregation logic. In the tests, we test for the new values from the result stream. It will aggregate them as a:6, b:9, c:9; then, since b and c reached the cap, it will flush them down the stream from our transformer. The other initialization step is to set up a periodic timer (called a punctuation in Kafka Streams) which will call a method of ours that scans the queue from the top and flushes out any records (using ProcessorContext#forward()) that are due to be forwarded, then removes them from the state stores. It then uses Spring's TransactionSynchronization facility to trigger corresponding inserts to the outbox table right before a transaction is committed.
https://kafka.apache.org/21/documentation/streams/developer-guide/dsl-api.html#applying-processors-and-transformers-processor-api-integration.
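To make the `branch` description above concrete, here is a minimal plain-Java sketch of first-match routing with two key-name predicates and a default predicate. It is a stand-in and does not use the Kafka Streams API. The class name `BranchSketch`, the helper `examplePredicates`, and the key names `sensor-1` and `sensor-2` are assumptions made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.BiPredicate;

/** Plain-Java stand-in for first-match branching; this is not the Kafka Streams API. */
final class BranchSketch {

    /** Hypothetical predicates: two key-name filters plus a catch-all default. */
    static List<BiPredicate<String, String>> examplePredicates() {
        List<BiPredicate<String, String>> predicates = new ArrayList<>();
        predicates.add((key, value) -> key.equals("sensor-1"));
        predicates.add((key, value) -> key.equals("sensor-2"));
        predicates.add((key, value) -> true); // default: everything else
        return predicates;
    }

    /** Routes each record to the branch of the first predicate that matches it. */
    static List<List<Map.Entry<String, String>>> branch(
            List<Map.Entry<String, String>> records,
            List<BiPredicate<String, String>> predicates) {
        List<List<Map.Entry<String, String>>> branches = new ArrayList<>();
        for (int i = 0; i < predicates.size(); i++) {
            branches.add(new ArrayList<>());
        }
        for (Map.Entry<String, String> record : records) {
            for (int i = 0; i < predicates.size(); i++) {
                if (predicates.get(i).test(record.getKey(), record.getValue())) {
                    branches.get(i).add(record);
                    break; // first match wins; a record matching nothing is dropped
                }
            }
        }
        return branches;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, String>> records = List.of(
                Map.entry("sensor-1", "MN"),
                Map.entry("sensor-3", "IL"),
                Map.entry("sensor-2", "WI"));
        List<List<Map.Entry<String, String>>> branches = branch(records, examplePredicates());
        for (int i = 0; i < branches.size(); i++) {
            System.out.println("branch " + i + ": " + branches.get(i));
        }
    }
}
```

Because the default predicate always returns true, it has to come last. Placed earlier, it would swallow every record before the key-name filters ever saw it.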

The intention is to show creating multiple new records for each input record. The obvious approach of using a job queue would already give us this. In this overview, he'll cover: When providing information about activities for display on our website, our frontend teams have a few requirements: the data must be quick to retrieve, ideally with a single request, and some calculation and aggregation should already be applied to the data. Set a new key (with possibly new type) for each input record. The outbox pattern is a good fit for this task.
However, we are also immediately deleting records from the table after inserting them, since we don't want the table to grow and the Debezium connector will see the inserts regardless. The `filter` function can filter either a KTable or KStream to produce a new KTable or KStream respectively. The Transformer interface having access to a key-value store and being able to schedule tasks at fixed intervals meant we could implement our desired batching strategy. And I really liked the processor API!

Let's create a class CustomProcessor that will implement a Transformer.

It is recommended to watch the short screencast above before diving into the examples. Also, we expect the updates to be in near real-time. `flatMap` performs as expected if you have used it before in Spark or Scala. Stateless transformations do not require state for processing. Let's create a message binding interface: Then, assuming that you have a Kafka broker running under localhost:9092. First we set up the data structures mentioned above. &stream-builder-${stream-listener-method-name}: More about this at https://cloud.spring.io/spring-cloud-static/spring-cloud-stream-binder-kafka/2.2.0.RC1/spring-cloud-stream-binder-kafka.html#_accessing_the_underlying_kafkastreams_object. Line 2: Get the actual StreamsBuilder from our factory bean. Line 3: Create a StoreBuilder that builds a KeyValueStore with a String serde defined for its key and a Long serde defined for its value. Line 4: Add our newly created StoreBuilder to the StreamsBuilder. Then we have our service's Kafka consumer(s) work off that topic and update the cache entries. `count` is a stateful operation which was only used to help test in this case. This kind of buffering and deduplication is not always trivial to implement when using job queues. These are the dependencies we need (in Gradle's build.gradle format): Our transformer implements the Transformer interface from kafka-streams, which allows stateful transformation of records from one Kafka topic to another. How did we move the mountain? The problem was that MySQL was locking the part of the index where the primary key would go, holding up inserts from other transactions.
GetYourGuide is the booking platform for unforgettable travel experiences. In our case the value is a string of comma-separated language codes, so our merge function will return a string containing the union of the old and new language codes. Streaming all over the world: Real life use cases with Kafka Streams, Dr. Benedikt Linse, Senior Solutions Architect, Confluent

https://www.meetup.com/Apache-Kafka-Germany-Munich/events/281819704/

Below is the code snippet using the transform() operator. Seems like we are done with our CustomProcessor (GitHub link to the repo is at the end of this article). You can flush key-value pairs in two ways: by using the previously mentioned this.context.forward(key, value) call or by returning the pair from the transform method. The state store is a simple key-value store that uses RocksDB, which also (by default) persists data in an internal Kafka topic. Processor KSTREAM-TRANSFORM- has no access to StateStore counterKeyValueStore as the store is not connected to the processor

This way, we can retain consistency by writing data in a single transaction on only one data source, with no need to worry about whether our job queue is down at the moment.

Batching write operations to a database can significantly increase the write throughput. Hope these examples helped. In this example, we use the passed-in filter based on values in the KStream. Let's add another method called findAndFlushCandidates: When we call findAndFlushCandidates, it will iterate over our state store, check if the cap for a pair is reached, flush the pair using the this.context.forward(key, value) call, and delete the pair from the state store. Hinrik Örn Sigurðsson is a senior backend engineer in the Catalog team. A state store instance is created per partition and can be either persistent or in-memory only. Here is the difference between them in simple language. However, there were still a few concerns to be addressed: Decoupling: We want to perform the computation and cache update in a separate work stream from the one that responds to the update request. All of this happens independently of the request that modified the database, keeping those requests resilient. I do plan to cover aggregating and windowing in a future post. Also, using in-memory key-value stores meant that the Kafka Streams application left a minimal footprint on the Kafka cluster. Or, a certain amount of time had elapsed since the last update. From the Kafka Streams documentation, it's important to note. The Visitor Java class represents the input Kafka message and has the JSON representation: The VisitorAggregated Java class is used to batch the updates and has the JSON representation: The snippet below describes the code for the approach. Hello, today I'm going to talk about this pretty complex topic of the Apache Kafka Streams Processor API (https://docs.confluent.io/current/streams/developer-guide/processor-api.html).
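The findAndFlushCandidates step described above can be sketched without a Kafka dependency. In this minimal stand-in, a `LinkedHashMap` plays the role of the state store and a list collects what `this.context.forward(key, value)` would have emitted. The class name `CapAggregatorSketch`, the cap of 7, and the input values are assumptions chosen so that the result matches the a:6, b:9, c:9 example; the original code may differ.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java stand-in for a cap-based stateful transformer; not the Kafka Streams API. */
final class CapAggregatorSketch {
    private final Map<String, Long> store = new LinkedHashMap<>(); // stands in for the KeyValueStore
    private final List<String> forwarded = new ArrayList<>();      // stands in for context.forward(...)
    private final long cap;                                        // assumed threshold

    CapAggregatorSketch(long cap) {
        this.cap = cap;
    }

    /** Adds the value to the running sum for the key, then flushes anything at or over the cap. */
    void transform(String key, long value) {
        store.merge(key, value, Long::sum);
        findAndFlushCandidates();
    }

    /** Iterates over the store, forwards every pair that reached the cap, and deletes it. */
    void findAndFlushCandidates() {
        Iterator<Map.Entry<String, Long>> it = store.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (entry.getValue() >= cap) {
                forwarded.add(entry.getKey() + ":" + entry.getValue());
                it.remove(); // delete the flushed pair from the store
            }
        }
    }

    List<String> forwarded() {
        return forwarded;
    }

    Map<String, Long> store() {
        return store;
    }

    public static void main(String[] args) {
        CapAggregatorSketch aggregator = new CapAggregatorSketch(7L);
        aggregator.transform("a", 1L);
        aggregator.transform("a", 2L);
        aggregator.transform("a", 3L);
        aggregator.transform("b", 4L);
        aggregator.transform("b", 5L);
        aggregator.transform("c", 9L);
        System.out.println("forwarded: " + aggregator.forwarded());
        System.out.println("still buffered: " + aggregator.store());
    }
}
```

With these hypothetical inputs, b and c reach the cap and are flushed, while a stays in the store at 6 until more values arrive.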
Due to repartitioning, what was initially one single topology is now broken into two sub-topologies, and the processing overhead of writing to and reading from a Kafka topic is introduced, along with duplication of the source topic data. Personally, I got to the processor API when I needed a custom count-based aggregation. We needed something above what the Kafka Streams DSL operators offered. The state store will be created before we initialize our CustomProcessor; all we need is to pass stateStoreName inside it during initialization (more about it later). I also didn't like the fact that Kafka Streams would create many internal topics that I didn't really need and that were always empty (possibly happened due to my silliness). We are using a UUID as the primary key, which normally avoids this kind of lock contention since the distribution of new keys is pretty random across the index. Consistency: We want to guarantee that if our data is updated, its cached representation would also be updated.
In our case, we will do the following: It will ask you to implement 3 methods from the Transformer interface: We should implement init(ProcessorContext context) and keep the context; furthermore, we should also get a state store out of it.

With an empty table, MySQL effectively locks the entire index, so every concurrent transaction has to wait for that lock. We got rid of this kind of locking by lowering the transaction isolation level from MySQL's default of REPEATABLE READ to READ COMMITTED. I'll try to post more interesting stuff I'm working on. Define the following properties under application.properties: Should be pretty self-descriptive, but let me explain the main parts: Let's enable binding and create a simple stream listener that would print incoming messages: So far, so good! Attaching KeyValue stores to KafkaStreams Processor nodes and performing read/write operations. The Transformer interface is for stateful mapping of an input record to zero, one, or multiple new output records (both key and value type can be altered arbitrarily). Let's create a custom, stateful transformer that would aggregate certain letters, and as soon as it reaches a certain cap, it will flush aggregated values down the stream. The challenges we faced with a time-based windowing and groupByKey() + reduce() approach indicated that it was not the most ideal approach for our use case. Our service is written in Java, with Spring as the application framework and Hibernate as an ORM.
This ensures we only output at most one record for each key in any five-minute period. You can find the complete working code here. We need an ordered queue to store the key of the record and the timestamp of when it is scheduled to be forwarded to the output topic (a TimestampedKeyValueStore). As a result, the Kafka Streams framework is forced to perform a repartition operation (similar to the shuffle step in the Map/Reduce paradigm). After records with identical keys are co-located to the same partition, aggregation is performed and results are sent to the downstream Processor nodes. This will allow us to test the expected `count` results. Hinrik explains how the team utilized Kafka Streams to improve their service's performance when using the outbox pattern. Data is not sent (round-tripped) to any internal Kafka topic. Conversely, let's say you wish to sum certain values in the stream. This is great for reliability since our transformer can pick up right where it left off if our service crashes. Hence, they are stored on the Kafka broker, not inside our service. A background thread listens for the termination signal and ensures a graceful shutdown for the Kafka Streams application. If the key is already known, the only thing we do is merge the new value with the existing one we have.
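For values that are comma-separated language codes, as mentioned elsewhere in this article, the merge for an already-known key could look like the sketch below. The class name `LanguageMerge` and the choice to keep first-seen order are assumptions; the text only says the result contains the union of the old and new codes.

```java
import java.util.LinkedHashSet;
import java.util.Set;

/** Sketch of a merge function for comma-separated language codes. */
final class LanguageMerge {

    /** Returns the union of both code lists, without duplicates, in first-seen order. */
    static String merge(String oldCodes, String newCodes) {
        Set<String> union = new LinkedHashSet<>();
        for (String csv : new String[] {oldCodes, newCodes}) {
            for (String code : csv.split(",")) {
                String trimmed = code.trim();
                if (!trimmed.isEmpty()) { // skip blanks from empty input or stray commas
                    union.add(trimmed);
                }
            }
        }
        return String.join(",", union);
    }

    public static void main(String[] args) {
        System.out.println(merge("en,de", "de,fr")); // prints "en,de,fr"
    }
}
```

A union like this gives the same result when the same update is applied twice, which is convenient when records may be redelivered.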
If the instance goes down, it will not get rebalanced among other listening instances from the same group; only the original data (pre-transform) will. The Transformer interface strikes a nice balance between the ease of using Kafka Streams DSL operators and the capabilities of the low-level Processor API. Developers refer to the processor API when the Apache Kafka Streams toolbox doesn't have the right tool for their needs or they need better control over their data. We also need a map holding the value associated with each key (a KeyValueStore). His team's mission is to develop the services that store our tours and activities' core data and further structure and improve the quality of that data. `valFilter` is set to MN in the Spec class. We're going to cover examples in Scala, but I think the code would be readable and comprehensible for those of you with a Java preference as well. Here we simply create a new key, value pair with the same key, but an updated value. After some research, we came across the Processor API. It deserves a whole new article; it is also a pretty complex and interesting topic. Well, I didn't tell you the whole story. Our expectation of window-based aggregation was that for each key we would receive the results in the downstream Processor nodes strictly after the expiration of the window. Kafka Streams Transformation Examples featured image: https://pixabay.com/en/dandelion-colorful-people-of-color-2817950/.
Also, the KTable object is periodically flushed to the disk.

If it isn't, we add the key along with a timestamp, e.g. five minutes in the future, and also store that record's value in our map. Kafka Streams Transformations provide the ability to perform actions on Kafka Streams such as filtering and updating values in the stream. I like to think of it as one-to-one vs the potential for `flatMap` to be one-to-many. https://kafka.apache.org/21/documentation/streams/developer-guide/dsl-api.html#transform-a-stream. Kafka Streams Transformations are available in two types: Stateless and Stateful. The following Java examples will help you to understand the usage of org.apache.kafka.streams.kstream.Transformer. With all these changes in place, our system is better decoupled and more resilient, all the while having an up-to-date caching mechanism that scales well and is easily tuned. The data for a single activity is sourced from over a dozen database tables, any of which might change from one second to the next, as our suppliers and staff modify and enter new information about our activities. All the source code is available from my Kafka Streams Examples repo on GitHub.
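The buffering logic described in this article (an ordered queue of due times, a map of values, a merge for known keys, and a periodic punctuation that forwards whatever is due) can be sketched in plain Java. This is a stand-in, not the authors' transformer: a `TreeMap` and a `HashMap` replace the Kafka Streams state stores, a list replaces `ProcessorContext#forward()`, and the name `DelayBufferSketch` and the explicit `nowMs` parameters are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BinaryOperator;

/** Plain-Java stand-in for a delay-and-deduplicate buffer; not the Kafka Streams API. */
final class DelayBufferSketch {
    private final long delayMs;
    private final BinaryOperator<String> merge;
    private final TreeMap<Long, List<String>> queue = new TreeMap<>(); // due time -> keys, ordered
    private final Map<String, String> values = new HashMap<>();        // key -> buffered value
    private final List<String> forwarded = new ArrayList<>();          // stands in for forward()

    DelayBufferSketch(long delayMs, BinaryOperator<String> merge) {
        this.delayMs = delayMs;
        this.merge = merge;
    }

    /** Known key: merge into the buffered value. New key: store it and schedule it. */
    void transform(String key, String value, long nowMs) {
        if (values.containsKey(key)) {
            values.merge(key, value, merge);
        } else {
            values.put(key, value);
            queue.computeIfAbsent(nowMs + delayMs, t -> new ArrayList<>()).add(key);
        }
    }

    /** Scans the queue from the top, forwards every due record, and removes it from both stores. */
    void punctuate(long nowMs) {
        Iterator<Map.Entry<Long, List<String>>> due =
                queue.headMap(nowMs, true).entrySet().iterator();
        while (due.hasNext()) {
            for (String key : due.next().getValue()) {
                forwarded.add(key + "=" + values.remove(key));
            }
            due.remove();
        }
    }

    List<String> forwarded() {
        return forwarded;
    }

    public static void main(String[] args) {
        long fiveMinutes = 5 * 60 * 1000L;
        DelayBufferSketch buffer = new DelayBufferSketch(fiveMinutes, (a, b) -> a + "," + b);
        buffer.transform("tour-1", "en", 0L);
        buffer.transform("tour-1", "de", 60_000L); // merged, not rescheduled
        buffer.punctuate(fiveMinutes);
        System.out.println(buffer.forwarded());
    }
}
```

Since a known key is merged rather than rescheduled, each key is forwarded at most once per delay window, which is the property the article relies on.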


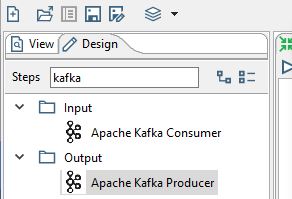

kafka streams transformer example
